Hi there,

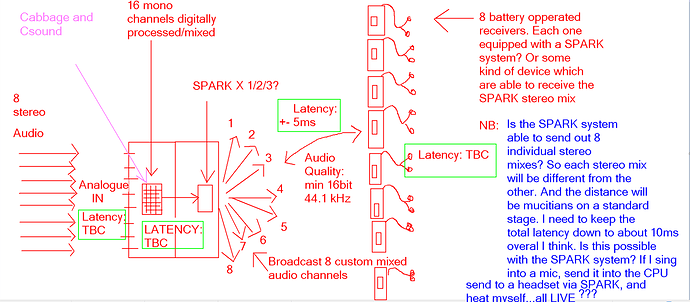

I am trying to design an in-ear monitor system for musicians, using Cabbage/Csound to process and stream multichannel audio in real time.

I will be using a Raspberry Pi 4 (8 GB) and will add 16 analogue audio inputs from Texas Instruments. I will run Linux with a real-time kernel. The incoming audio will be processed on the Raspberry Pi using Cabbage/Csound and then sent over the air via a Spark wireless streaming device (see https://www.sparkmicro.com/wp-content/uploads/2020/03/SPARK-Audio-apps-note-v2.5.pdf).

I need a minimum of 8 stereo channels (16 mono channels), which will allow me to use 8 stereo wireless headsets.

I understand that Cabbage is mainly used for building instruments, synths, etc., but the website says it can also be used to build standalone multichannel applications…

So I want to use Cabbage to build an app that will let you:

- Select individual input channels from the analogue inputs

- Mix the incoming audio with a basic mixer

- Add basic effects, e.g. reverb, to a specific mixer channel via the audio patcher

- Output 8 individual stereo mixes to the Spark wireless system or similar
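To make the mixer requirement concrete, here is a minimal sketch of the routing arithmetic, assuming 16 mono inputs feeding 8 independent stereo headset mixes, each with its own per-input gain and pan (constant-power pan law). It is plain Python illustrating the maths only, not Cabbage/Csound code and not suitable for real-time use; the names `mix_frame`, `pan_gains`, `gains` and `pans` are hypothetical.

```python
import math

NUM_INPUTS = 16  # mono analogue inputs
NUM_MIXES = 8    # stereo headset mixes -> 2 * 8 = 16 output channels


def pan_gains(pan):
    """Constant-power pan: pan in [-1, 1], where -1 is hard left and +1 is hard right."""
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)


def mix_frame(inputs, gains, pans):
    """Mix one frame of NUM_INPUTS mono samples into NUM_MIXES stereo pairs.

    gains[m][i] is the level of input i in mix m; pans[m][i] is its pan position.
    Returns a flat list of 2 * NUM_MIXES samples: [mix0_L, mix0_R, mix1_L, ...].
    """
    out = [0.0] * (2 * NUM_MIXES)
    for m in range(NUM_MIXES):
        for i, sample in enumerate(inputs):
            g = gains[m][i]
            if g == 0.0:
                continue  # input i is not present in mix m
            left, right = pan_gains(pans[m][i])
            out[2 * m] += sample * g * left
            out[2 * m + 1] += sample * g * right
    return out


if __name__ == "__main__":
    # Hypothetical example: headset mix 0 hears only input 0 (e.g. a vocal mic), centred, at unity gain.
    gains = [[0.0] * NUM_INPUTS for _ in range(NUM_MIXES)]
    pans = [[0.0] * NUM_INPUTS for _ in range(NUM_MIXES)]
    gains[0][0] = 1.0
    frame = [0.5] + [0.0] * (NUM_INPUTS - 1)
    print(mix_frame(frame, gains, pans)[:4])
```

Each musician's mix is just a row of gains and pans, so "8 stereo mixes" amounts to a 16 x 8 gain/pan matrix producing 16 output channels, whether that runs in one instance or is split across several.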

Can someone answer the following questions:

1. Can Cabbage support up to 16 channels of audio input (i.e. 8 stereo channels)?

2. Can Cabbage be used to build either a) a single instance that lets you select multiple audio inputs and outputs, or b) 8 instances running at the same time, each able to select a specific stereo input channel?

3. Can Cabbage send that input out again, either via analogue outputs or something else? (I will confirm this once I know how the Spark chips work.)

4. Can Cabbage be used for real-time audio processing for live musicians, that is, as a live monitoring system with basic effects? For example, if I sing into a mic, the signal will enter the Pi via an analogue input, be processed by Cabbage, and then be output either via analogue or directly to the Spark chip (tbc).

5. I will have to run Cabbage on Linux with real-time audio. Is that supported?

6. I will have to get the overall processing latency down to 5 ms with all 16 mono channels running. Is that achievable?
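For question 6, a rough budget helps show which buffer sizes could fit in 5 ms. This is a minimal, deliberately conservative sketch, assuming each direction (input and output) buffers `periods` full periods of `frames_per_period` samples; real drivers may behave differently, so actual figures should be measured with a loopback test. The converter and wireless-link delays are hypothetical parameters defaulting to zero; the real values would come from the ADC/DAC datasheets and the Spark documentation.

```python
def buffer_latency_ms(frames_per_period, periods, sample_rate):
    """Latency contributed by one direction of buffering, in milliseconds."""
    return 1000.0 * frames_per_period * periods / sample_rate


def round_trip_ms(frames_per_period, periods, sample_rate, converter_ms=0.0, link_ms=0.0):
    """Conservative input-to-output estimate: input + output buffering + fixed delays.

    converter_ms: combined ADC + DAC delay (hypothetical; take it from the datasheets).
    link_ms: wireless link delay (hypothetical; take it from the Spark documentation).
    """
    one_way = buffer_latency_ms(frames_per_period, periods, sample_rate)
    return 2 * one_way + converter_ms + link_ms


if __name__ == "__main__":
    budget_ms = 5.0
    for frames in (32, 64, 128, 256):
        est = round_trip_ms(frames, periods=2, sample_rate=48000)
        verdict = "fits" if est <= budget_ms else "exceeds"
        print(f"{frames:4d} frames x 2 periods @ 48 kHz: {est:6.2f} ms "
              f"({verdict} the {budget_ms} ms budget before converter/link delays)")
```

Under these assumptions only very small buffers leave room for the converter and wireless delays, which is why the buffer size the Pi can sustain with 16 channels and effects matters as much as the software choice.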

Please could someone respond to the six questions above? If Cabbage/Csound is not the right software for this, could someone point me to the correct software?

Thanks

See the attached picture (purple markup).