Hey all,

Just wondering if any procedural audio veterans would be willing to share any rich sources of information that were particularly enlightening on their journey in learning procedural audio? Books, websites, documentaries or insight that arose from experience, anything at all that informed your perspective and approach.

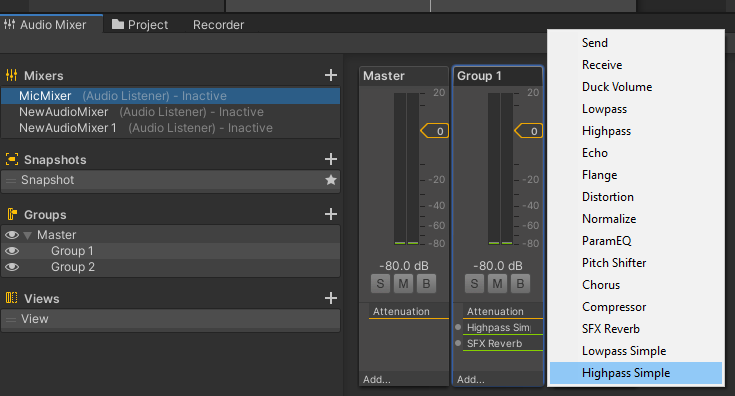

Furthermore, are there many people online creating content for learning procedural audio in the context of Cabbage? I’ve used @rorywalsh’s tutorials which are excellent and very useful indeed, was just wondering if anyone else is out here talking about Cabbage or producing additional learning materials?

I do learn many things from examining the structure and processes in the Cabbage examples, breaking them apart and changing their behaviours etc. But I’d love to hear anyone talk broadly about their process and why they make the decisions they do when designing sounds, particularly sounds that mimic the real world: organic timbres such as earth, wind, fire, metal, glass etc… and other organic sounds such as the human voice.

This is my current reading list:

- Andy Farnell - Designing Sound (Andy Farnell’s website is indeed amazing and contains lots of the audio processing examples I’m looking for, so I may translate some of these processes to Cabbage as an exercise): http://aspress.co.uk/sd/

- Perry R. Cook - Real Sound Synthesis

- Udo Zölzer - DAFX (Digital Audio Effects)

- David Creasey - Audio Processes

Is there some intuition that arises from ear training, coupled with bedrock audio processing knowledge, that you have developed over time which has made it easier to figure out what components you need to create that sound as you hear it, analyse it and decompose it in your mind?

I’m sure every case is different, I’m just curious about the processes of procedural audio enthusiasts when it comes to having a blank Cabbage patch and a sound you want to create. Audio processing can feel like a dark-arts alchemy with all its abstraction, detail and wondrous mystery to a humble noob.

Would love to hear any and all thoughts, just looking for insight.