Hello!

I’m trying to achieve the same behaviour as a CsoundUnityChild, but dynamically, meaning that I don’t know the Csound audio channel name in advance. I want to create multiple instances of the same instrument on different AudioSources, so that I can spatialize them separately but still update their content from the same CsoundUnity instance.

I’m creating each instrument with this C# code:

    private void CreateInstrument(int instrNumber)
    {
        // this creates the GameObject in the 3D world
        var instr = Instantiate(_instrumentPrefab, this.transform);

        // here I'm saving how many instances have been created, to correctly handle the channel name
        if (_instrumentInstanceCounter.ContainsKey(instrNumber))
        {
            _instrumentInstanceCounter[instrNumber]++;
        }
        else
        {
            _instrumentInstanceCounter.Add(instrNumber, 1); // let's start from 1 here, so the channel will be 1.1 aka instr 1 instance 1
        }

        var key = instrNumber;
        var value = _instrumentInstanceCounter[key];

        // a single instance will then update two audio channels, left and right
        // key is increased by one since the instruments start from 1 in Csound
        // we could have used instruments[instrNumber].number
        instr.Init(this._csound, $"chan{key + 1}.{value}L", $"chan{key + 1}.{value}R", instruments[instrNumber]);
    }

where _instrumentInstanceCounter is a Dictionary<int, int> and instruments is a List<InstrumentData>, in which I store all the parameters I want to pass to the instruments on creation.
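For reference, the naming scheme above can be isolated from Unity and tested on its own. This is only a minimal sketch: `ChannelNamer` and `Next` are hypothetical names, not part of CsoundUnity, and unlike `CreateInstrument` it takes the 1-based Csound instrument number directly instead of a list index.

```csharp
using System.Collections.Generic;

// Hypothetical helper (not part of CsoundUnity): hands out unique channel
// names per instrument instance, following the "chan<instr>.<instance>" scheme.
public sealed class ChannelNamer
{
    private readonly Dictionary<int, int> _instanceCounter = new Dictionary<int, int>();

    // instrNumber is assumed to be the 1-based Csound instrument number.
    public (string Left, string Right) Next(int instrNumber)
    {
        // TryGetValue leaves count at 0 when the instrument has no instances yet
        _instanceCounter.TryGetValue(instrNumber, out var count);
        count++; // instances are numbered from 1
        _instanceCounter[instrNumber] = count;

        var baseName = $"chan{instrNumber}.{count}";
        return (baseName + "L", baseName + "R");
    }
}
```

Calling `Next(1)` twice gives the pairs `chan1.1L`/`chan1.1R` and `chan1.2L`/`chan1.2R`, so every instance gets its own left and right channel.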

This is the InstrumentData struct and its parameters. I want to randomize each parameter’s value between min and max.

    [Serializable]
    public struct InstrumentData
    {
        public string name;
        public int number;
        public List<Parameter> parameters;
    }

    [Serializable]
    public struct Parameter
    {
        public string name;
        public float min;
        public float max;
    }

The important bit is in the Init method, where I pass the names of the audio channels I want this instrument to write to.

So in my csd each instrument does not write directly to the output, but to an audio channel, for example like this:

    SchanL = p10
    SchanR = p11
    chnset a1, SchanL
    chnset a2, SchanR

This is the Init method of the class that will act like a CsoundUnityChild:

    public void Init(CsoundUnity csound, string chanLeft, string chanRight, InstrumentData data)
    {
        _chanL = chanLeft;
        _chanR = chanRight;
        _zerodbfs = csound.Get0dbfs();
        _csound = csound;

        // creating the buffers that will hold the data from Csound.GetAudioChannel
        _bufferL = new double[_csound.GetKsmps()];
        _bufferR = new double[_csound.GetKsmps()];

        var parameters = new List<string>();
        foreach (var p in data.parameters)
        {
            var value = Random.Range(p.min, p.max);
            parameters.Add($"{value}");
        }

        var score = $"i{data.number} {0} " + string.Join(" ", parameters) + $" \"{chanLeft}\" \"{chanRight}\"";
        Debug.Log($"score: {score}");
        _csound.SendScoreEvent(score);
        _initialized = true;
    }

This generates scores like this:

    score: i1 0 5 0 11.97545 497.9954 0.415536 8.615604 0.6595364 "chan1.1L" "chan1.1R"
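One thing to watch when building score strings this way: C# string interpolation formats floats with the current culture, so on a machine whose locale uses a decimal comma the p-fields could come out as `11,97545`. Below is a minimal sketch of a culture-independent builder; `ScoreBuilder` and `Build` are hypothetical names, and the layout (start time 0, numeric p-fields, then the two quoted channel names) is copied from the score shown above.

```csharp
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper (not part of CsoundUnity) that formats an "i" statement
// the same way on every locale.
public static class ScoreBuilder
{
    public static string Build(int instrNumber, IEnumerable<float> values, string chanLeft, string chanRight)
    {
        var fields = new List<string>();
        foreach (var v in values)
        {
            // InvariantCulture always uses '.' as the decimal separator
            fields.Add(v.ToString(CultureInfo.InvariantCulture));
        }

        // p1 = instrument, p2 = start time 0, then the numeric p-fields,
        // then the two channel names as quoted strings
        return $"i{instrNumber} 0 " + string.Join(" ", fields) + $" \"{chanLeft}\" \"{chanRight}\"";
    }
}
```

With the values `5` and `0.5` for instrument 1 this gives `i1 0 5 0.5 "chan1.1L" "chan1.1R"`, whatever the system locale is.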

And this is the OnAudioFilterRead method that writes the samples to the newly created Unity AudioSource:

    private void OnAudioFilterRead(float[] samples, int numChannels)
    {
        if (!_initialized) return;

        for (int i = 0; i < samples.Length; i += numChannels, _ksmpsIndex++)
        {
            if ((_ksmpsIndex >= _csound.GetKsmps()) && (_csound.GetKsmps() > 0))
            {
                _ksmpsIndex = 0;
                _bufferL = _csound.GetAudioChannel(_chanL);
                _bufferR = _csound.GetAudioChannel(_chanR);
            }

            for (uint channel = 0; channel < numChannels; channel++)
            {
                samples[i + channel] = channel == 0 ? (float)_bufferL[_ksmpsIndex] : (float)_bufferR[_ksmpsIndex];
            }
        }
    }

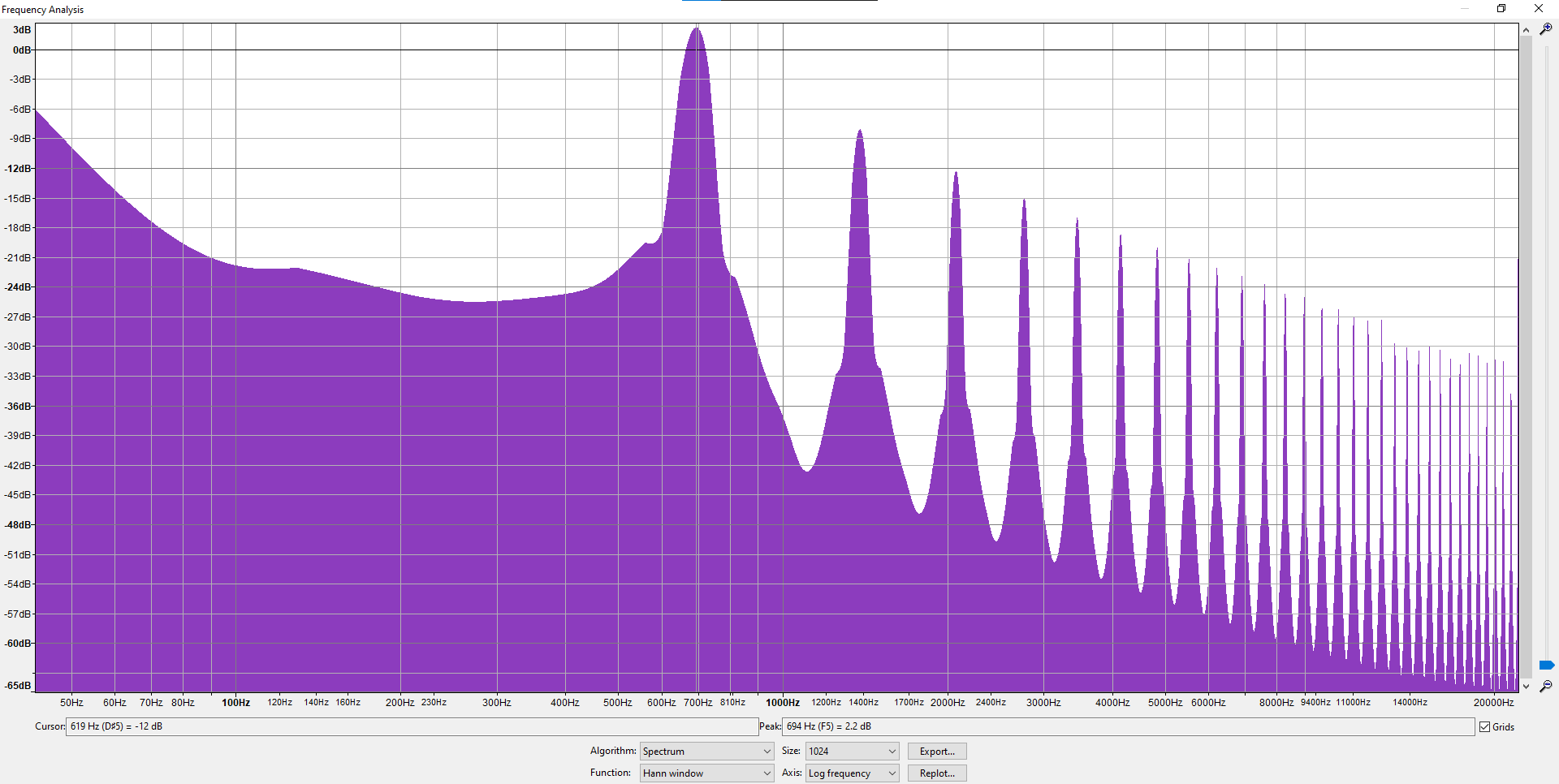

Everything looks correct to me, but it sounds really bad, like this:

This should be 5 seconds of a stereo sine at 440 Hz.

Notice the final part: there shouldn’t be any sound, since the instrument has stopped playing, but there’s an ugly digital noise instead. So I will have to handle this too: once the instrument stops updating the channel, the channel holds noise/garbage data, and I shouldn’t update the AudioSource anymore.
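One possible way to stop feeding the AudioSource once the note is over is to count frames on the C# side, since the duration (p3) is known when the score event is sent. This is only a sketch under assumptions: `NoteGate` is a hypothetical class, and it assumes the note starts at roughly the same time as the first audio callback, which may not hold exactly.

```csharp
using System;

// Hypothetical gate (not part of CsoundUnity): allows audio through for a
// fixed number of frames, then reports that silence should be written.
public sealed class NoteGate
{
    private long _framesLeft;

    public NoteGate(double durationSeconds, int sampleRate)
    {
        _framesLeft = (long)Math.Ceiling(durationSeconds * sampleRate);
    }

    // Call once per audio frame. Returns true while the note is still
    // sounding, false once its duration has elapsed.
    public bool Consume()
    {
        if (_framesLeft <= 0) return false;
        _framesLeft--;
        return true;
    }
}
```

Inside the per-frame loop of OnAudioFilterRead this would become something like `if (!gate.Consume()) { samples[i + channel] = 0f; }`, so stale channel content is never copied after the note ends.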

I really hope this setup makes sense and that it’s not a thread desync issue.

Any ideas?

P.S. I had to modify the last lines of CsoundUnity.ParseCsdFileForAudioChannels like this:

    // discard channels that are not plain strings, since they cannot be interpreted afterwards
    // "validChan" vs SinvalidChan
    if (!split[1].StartsWith("\"") || !split[1].EndsWith("\"")) continue;

    var ach = split[1].Replace('\\', ' ').Replace('\"', ' ').Trim();

    if (!locaAudioChannels.Contains(ach))
        locaAudioChannels.Add(ach);

because I don’t want SchanL and SchanR to be recognized as channels, since they are Csound string variables.

Regarding the pool idea, you could compile an instrument at i-time that will insert the channels into any existing orc. Regarding the 1024 buffer size in Unity, are you sure the buffer size is always the same? Many systems end up with variable-sized processing blocks, which might explain the noise.
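A quick way to check the buffer size question is to record the smallest and largest block seen in the audio callback. The sketch below is plain C# with a hypothetical `BlockSizeProbe` class; it assumes `Record` is called at the top of OnAudioFilterRead with `samples.Length` and `numChannels`, and that the results are read from the main thread for debugging only.

```csharp
// Hypothetical probe (not part of CsoundUnity or Unity): tracks the range of
// block sizes, in frames, that the audio callback has been called with.
public sealed class BlockSizeProbe
{
    public int MinFrames { get; private set; } = int.MaxValue;
    public int MaxFrames { get; private set; }

    public void Record(int samplesLength, int numChannels)
    {
        // samples is interleaved, so frames = total samples / channels
        var frames = samplesLength / numChannels;
        if (frames < MinFrames) MinFrames = frames;
        if (frames > MaxFrames) MaxFrames = frames;
    }

    // True if nothing was recorded yet or every block had the same size.
    public bool IsConstant => MaxFrames == 0 || MinFrames == MaxFrames;
}
```

If `IsConstant` stays true and `MinFrames` is a multiple of ksmps, variable block sizes can probably be ruled out as the cause of the noise.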