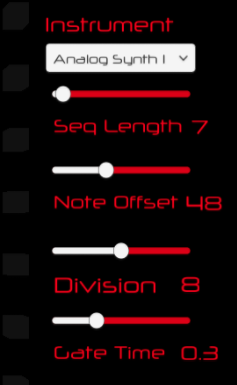

greetings everyone! this is a somewhat involved topic: using Csound in a Unity-based, VR-oriented 3D music sequencer of sorts. briefly, imagine eight 8 x 8 matrix sequencers stacked into a cube. triggering always moves from left to right, with time on the horizontal axis and pitch on the vertical axis. now rotate the cube's data while keeping the triggering direction and note assignments fixed, so rotating the cube rearranges what gets played.
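to make the cube idea concrete, here is a rough Python sketch of one possible data layout. it is purely illustrative: `cube[layer][row][col]` with `row` as pitch and `col` as time, the 90-degree rotation about the vertical axis, and the `ROW_TO_NOTE` scale are all hypothetical choices, not how the sequencer actually stores its data. the point it shows is that rotation only moves the data; the playhead still scans columns left to right and each row keeps its note.

```python
N = 8  # eight layers, each an 8 x 8 grid

# Hypothetical fixed note for each row (row 0 = lowest pitch): a C major scale.
ROW_TO_NOTE = [48, 50, 52, 53, 55, 57, 59, 60]


def empty_cube():
    # cube[layer][row][col]: row is the pitch axis, col is the time (step) axis
    return [[[False] * N for _ in range(N)] for _ in range(N)]


def rotate_about_vertical(cube):
    """Rotate the data 90 degrees about the vertical (pitch) axis.

    Rows keep their pitch; the layer and time axes trade places.
    """
    out = empty_cube()
    for layer in range(N):
        for row in range(N):
            for col in range(N):
                out[col][row][N - 1 - layer] = cube[layer][row][col]
    return out


def events_at_step(cube, step):
    """Return (layer, midi_note) pairs fired when the playhead reaches `step`.

    The playhead always scans col left to right and every row keeps its note,
    so a rotation changes what plays, not how the grid is read.
    """
    return [(layer, ROW_TO_NOTE[row])
            for layer in range(N)
            for row in range(N)
            if cube[layer][row][step]]


cube = empty_cube()
cube[2][0][0] = True                 # layer 2, lowest row, first step
rotated = rotate_about_vertical(cube)
print(events_at_step(cube, 0))       # [(2, 48)]
print(events_at_step(rotated, 5))    # [(0, 48)]
```

rotations about the other two axes would be the same kind of index shuffle, just swapping a different pair of axes.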

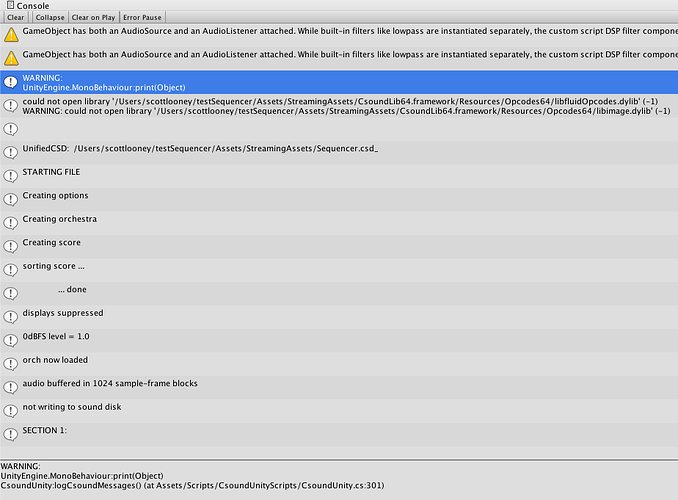

i'm mainly interested in two things. first, the ability to have a bank of instruments that i can assign to these layers. technically it uses MIDI note numbers, but it isn't actually sending MIDI. i've been getting by with PD until now, but it's been difficult because my plugin can only load a single PD patch: no abstractions, no samples, nothing. frustrating. so looking at Cabbage, i see an opportunity to build very high-quality instruments that the sequencer can play. i'd assume that sending control and note data to the synth is fairly trivial to set up in CsoundUnity.
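for the instrument-bank side, one way to think about it (a sketch, not CsoundUnity's API) is to map each layer to a Csound instrument number and turn every triggered note into a standard Csound `i` score statement: `i p1 p2 p3 ...`, i.e. instrument, start time, duration, then any extra p-fields. `LAYER_TO_INSTR` and the use of p4 for the MIDI note and p5 for velocity are hypothetical conventions; the instrument would have to read them itself, e.g. with `cpsmidinn(p4)` to get a frequency. the resulting string would then be handed to whatever score-event method the CsoundUnity wrapper exposes.

```python
# Hypothetical bank: which Csound instrument number plays each cube layer
# (layers 0 and 1 share instrument 1 here, just to show that's possible).
LAYER_TO_INSTR = {0: 1, 1: 1, 2: 2, 3: 3, 4: 4, 5: 5, 6: 6, 7: 7}


def score_event(layer, note, velocity=100, dur=0.25, delay=0.0):
    """Build a Csound score statement: i <instr> <start> <dur> <note> <velocity>.

    p4 = MIDI note number and p5 = velocity are an assumed convention; the
    receiving instrument has to read them itself.
    """
    instr = LAYER_TO_INSTR[layer]
    return f"i {instr} {delay:g} {dur:g} {note} {velocity}"


# Notes fired by the sequencer at one step, as (layer, midi_note) pairs.
for layer, note in [(0, 48), (2, 60)]:
    print(score_event(layer, note))
# i 1 0 0.25 48 100
# i 2 0 0.25 60 100
```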

the second is more challenging from my perspective. i need a really tight timing grid that controls the sequencer's event triggering while interacting with the environment. preferably Squarepusher/Aphex Twin tight. C# isn't great for this because garbage-collection pauses can cause timing hiccups, but i'd be curious what could work better.

i discovered a thread on the JUCE forums where someone wanted to build a JUCE audio back end for a Unity app. it interested me because there was talk of creating a Mixer plugin with the C++ plugin SDK. as an aside, and in reply to your post: the newer version of the Mixer Plugin SDK does contain a MIDI-triggered subtractive synthesizer demo as well as some sequencing demos, so it's not just audio effects at this point, and integrating Cabbage into the SDK might well be worth looking at in the near future.
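on the tight timing grid: a common approach is to count samples inside the audio callback (e.g. Unity's `OnAudioFilterRead`, or the process callback of a native plugin) rather than timing steps from the frame loop, and to compute each step's start sample from its index so rounding never accumulates. the Python below only sketches that arithmetic; `StepClock` and its parameters are hypothetical, and a real version in C# or C++ would write into a preallocated buffer instead of building a list, so nothing allocates on the audio thread. at 48 kHz and 120 BPM with four steps per beat, each step is 48000 * 60 / (120 * 4) = 6000 samples.

```python
from fractions import Fraction


class StepClock:
    """Sample-counting sequencer clock, meant to be driven from the audio callback."""

    def __init__(self, sample_rate, bpm, steps_per_beat=4, num_steps=8):
        # Exact samples per step, e.g. 48000 * 60 / (120 * 4) = 6000
        self.samples_per_step = Fraction(sample_rate * 60) / (Fraction(bpm) * steps_per_beat)
        self.num_steps = num_steps   # columns per pattern (8 for an 8 x 8 grid)
        self.next_step = 0           # absolute index of the next step to fire
        self.sample_pos = 0          # absolute sample index at the start of the block

    def step_start(self, n):
        # Start sample of absolute step n, rounded down. Computed from n every
        # time, so rounding error never builds up over a long session.
        return int(n * self.samples_per_step)

    def process_block(self, frames):
        """Return (offset_in_block, column) for each step starting in this block."""
        events = []
        block_end = self.sample_pos + frames
        while self.step_start(self.next_step) < block_end:
            offset = self.step_start(self.next_step) - self.sample_pos
            events.append((offset, self.next_step % self.num_steps))
            self.next_step += 1
        self.sample_pos = block_end
        return events


clock = StepClock(sample_rate=48000, bpm=120)   # 6000 samples per step
for _ in range(3):
    print(clock.process_block(4096))
# [(0, 0)]
# [(1904, 1)]
# [(3808, 2)]
```

the offset tells the synth where inside the current block a step's notes should begin, which is what it would need in order to start them on the right sample instead of at the next block boundary.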

so there we go: virtual synths and samplers, check (mostly a messaging and format issue, i'm guessing). sample-accurate, DSP-based timing: not so sure about that. i did get decent-ish timing in Standalone mode using OnAudioFilterRead, but the Android build was laughably chaotic (a demo of this ran on Gear VR). i'm upgrading to a Vive quite soon, so i may run it on that device, though i do like the portability of the Gear VR. also, someone needs to rename the Android latency problem to something like 'horrifically inconsistent latency' to more accurately reflect my personal experience. anyway, i'm not the most efficient programmer, so there could be other issues at play. i've heard of an Android low-latency audio library called Superpowered that might help as well, but first i want to see what options Cabbage or the Unity Mixer SDK can offer in this regard. any advice appreciated!